Can a law make social media less addictive?

New York just passed a law on "addictive" social media feeds for children, but some researchers are questioning what that actually means.

New York Governor Kathy Hochul was clear about her opinion of social media earlier this month, speaking at a press conference to announce the signing of two new state laws designed to protect under-18-year-olds from the dangers of the online world.

The apps are responsible for transforming "happy-go-lucky kids into teenagers who are depressed", she said, but according to Hochul, the legislation she signed off on would help. "Today, we save our children," Hochul said. "Young people across the nation are facing a mental health crisis fuelled by addictive social media feeds."

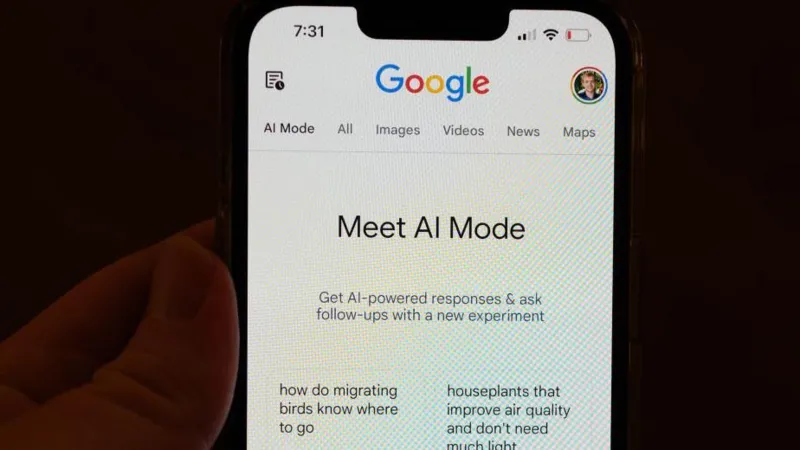

Starting in 2025, these new laws could force apps including TikTok and Instagram to send some children back to the earliest days of social media, before content was tailored by users' "likes" and tech giants collected data about our interests, moods, habits and more. The Stop Addictive Feeds Exploitation (SAFE) for Kids Act requires social media platforms and app stores to seek parental consent before children under 18 use apps with "addictive feeds", a groundbreaking attempt to regulate algorithmic recommendations. The SAFE Act will even prevent apps from sending notifications to child or teenage users between midnight and 6am – practically a legal bedtime for devices – and require better age verification to stop children slipping through undetected. The second law, the New York Child Data Protection Act, limits the information app providers collect about their users.

"By reining in addictive feeds and shielding kids' personal data, we'll provide a safer digital environment, give parents more peace of mind, and create a brighter future for young people across New York," Hochul explained.

New "Teen Accounts" for Instagram

On 17 September, Meta announced a package of sweeping changes for young Instagram users, meant to address parents' top concerns about children's safety online. Some observers say the move may be an attempt to get out in front of regulatory efforts.

In addition to new parental controls, accounts belonging to minors will be set to private by default, messages will be restricted to prevent contact from strangers and Instagram will impose controls to limit sensitive content. Teens will also be nudged to leave the app after an hour of usage, and a "sleep mode" will disable notifications between 10pm and 7am.

The laws reflect growing concern over the effects of social media on the mental health of young people. US Surgeon General Vivek Murthy recently went as far as calling for warning labels on social media apps, similar to the notices on cigarette packaging. In the US and many parts of the world, young people are facing a mental health crisis, and even big tech employees have acknowledged the harm their platforms have caused some children.

But the science linking social media and mental health problems is far less clear than many assume. In fact, numerous studies have even shown social media can have benefits for teenagers' mental health. It's led some technology analysts and child psychologists to call recent political interventions a "moral panic".

Some policy advocates and social media experts also question how easy legislative interventions like the SAFE Act will be to enforce. They say such laws could set back much-needed efforts to address the real hazards of social media, such as child sexual abuse material, privacy violations, hate speech, misinformation, dangerous and illegal content and more.

Mixed messages

Many studies that do find a link with poor mental health outcomes focus on "problematic social media use", where individuals struggle to regulate their use of social media. This has been associated with an increased prevalence of various forms of anxiety, for example, but also depression and stress. Some studies suggest a dose-response effect, where negative mental health symptoms increase with time spent on social media. But other studies suggest such associations are weak, and some have found no evidence tying the spread of social media to widespread psychological problems.

There are some studies that even suggest social media use in moderation can be beneficial in some circumstances by helping to create a sense of community.

Indeed, the US Surgeon General's own advisory on the impact of tech on young people suggests that it brings as many benefits as drawbacks. According to the Surgeon General's report, 58% of young people said social media helped them feel more accepted, and 80% praised its ability to connect them with their friends' lives.

And there is even debate about whether problematic social media use is a growing problem at all. One recent meta-analysis of 139 studies from 32 countries concluded that there was no sign it had increased over the past seven years, except in low-income countries, where the prevalence of mental health conditions tends to be higher.

One problem that crops up regularly is that many of the studies in this area rely on self-reported mood and usage patterns, which can bias the data. They also use such a wide variety of methods that they are not easily compared.

But this uncertainty in the science hasn't stopped the drumbeat of concern among child-protection campaigners and legislators. They argue that a precautionary principle is prudent and that more needs to be done to force big tech platforms to take action, with the two new pieces of legislation introduced by Hochul being just the latest move.

"There's a real sense of urgency about it all, that we must be seen to be doing something right now this minute to fix the problem," says Pete Etchells, professor of psychology and science communication at Bath Spa University, and the author of Unlocked: The Real Science of Screen Time.

"But just because it feels like an urgent problem to fix doesn't mean that the first solution that comes along would actually work."

Mixed responses

Some experts in online safety have welcomed the new laws in New York.

"While New York's legislation is much broader and less targeted on concrete harms than the UK's Online Safety Act, it's clear that regulation is the only way that big tech will clean up its algorithms and stop children being recommended huge amounts of harmful suicide and self-harm content," says Andy Burrows, an advisor at the Molly Rose Foundation, set up by the parents of Molly Russell, a UK teenager who killed herself in 2017 after seeing a series of self-harm images on social media – a contributing factor to her death, according to a landmark ruling in 2022 by a London coroner.

Burrows says Hochul's swift actions should be seen favourably compared to the US Congress, which he claims "drags its feet on passing comprehensive federal measures".

"The bar is quite low and this legislation only stands out as better compared to the numerous pieces of bad legislation out there," says Jess Maddox, assistant professor in digital media at the University of Alabama. "In terms of states in the US trying to regulate social media, this is some of the better attempts I've seen."

She praises it for not preventing minors from using social media outright, as a similar plan in Florida is attempting – a measure some worry will cause digital literacy issues and leave children less prepared for the future. "This does put the onus on social media platforms to do something," says Maddox.

The response from social media platforms themselves has been mixed. NetChoice, an industry body that represents many of the major technology companies including Google, X, Meta and Snap, described the New York legislation as "unconstitutional" and heavy-handed. It warned the laws could even have unintended consequences, such as increasing the risk of children being exposed to harmful content by removing the ability to curate feeds, and creating new privacy issues.

But a spokesperson for Meta, which runs Facebook, Instagram and WhatsApp, said: "While we don't agree with every aspect of these bills, we welcome New York becoming the first state to pass legislation recognising the responsibility of app stores." They pointed to research suggesting the majority of parents support legislation requiring app stores to obtain parental approval, and added: "We will continue to work with policymakers in New York and elsewhere to advance this approach."

X, TikTok, Apple and Google, YouTube's parent company, did not respond to the BBC's request to comment for this story.

Laws mandating parental consent on social media have also faced legal hurdles. In February, a federal judge upheld a block on an Ohio law that required parents' permission before kids under 16 use social media.

When the SAFE Act faces inevitable scrutiny, the debate around the science could further dampen its viability, says Ysabel Gerrard, senior lecturer in digital communication at the University of Sheffield in the UK, who has been studying the online safety movement. "It takes as its premise that social media 'addiction' is a proven phenomenon, but it isn't," she says. "While there's consensus that platforms are, by their very design, and as profit-seeking endeavours, aimed to be enjoyable for their users and to retain their attention, whether that should be classed as 'addiction' is still under debate."

But Gerrard says the second piece of legislation, the New York Child Data Protection Act, is stronger. "I've long worried about the loss of control children – well, all of us – have over their data and the lack of knowledge we all have about where it goes," she says. She believes the law will require platforms to explain how they use the data they collect, which would be a sea change. "While I entirely agree with the principles behind this Act, I will be interested to see how it evolves, as it would require platforms to do something they haven't yet managed, and to society's detriment."

Sam Spokony, a representative for Governor Hochul, declined to comment when contacted for a response to the criticism.

Enforcement troubles

There are those who also fear that the wrong approach to regulating social media platforms could ultimately have longer-term consequences.

While Maddox praises the acts for being better than some other state-level attempts, "this is where my praise ends as it seems largely unenforceable", she says. She points out that it's difficult to end "addictive feeds" in a single state, and compares it to online age verification laws that have effectively banned access to pornographic websites on a state-by-state basis in the US.

One concern is that, once the law goes into effect, it will be difficult to verify that social media feeds have actually been made less addictive. That in itself will make the law hard to enforce.

"In being unenforceable, I could see social media companies pointing to this as evidence they can't or shouldn't be regulated," says Maddox.

Another challenge is the many different approaches being taken by different states to regulate children's use of social media. Social media networks often transcend not just state boundaries but also international borders, and many key policymakers are sympathetic to the difficulty of implementing differing local restrictions. Already, this patchwork of local laws has given social media companies room to challenge the legislation through the courts in places including Ohio, California and Arkansas.

Maddox fears that laws rushed into place could do more harm than good in efforts to protect children online, compared with legislation that has had time to be properly scrutinised.

"For the short term, we may have done something, but in the long term, nothing will likely happen," she says.

She's not alone in that fear. "I worry that folks in power are wasting precious time on something that is unenforceable," says Gerrard.

So do critics of the new legislation see a better alternative? "Clearly in the long run it will be much better for all involved – and I think that also includes the tech companies – to have a single, well developed federal approach rather than a patchwork of 50 states taking separate approaches," says Burrows.

A unified, single approach that is based on evidence and acts as a global standard would be preferable, say the experts, and the technology industry tends to agree. The nascent world of AI regulation offers models that could be adapted to social media as well. For example, lawmakers are fighting to mandate public algorithm audits that would make companies open their AI systems to outside experts. However, it is an approach that may still require a global consensus: the UK, for instance, asks AI model makers to submit their products for analysis by its AI oversight body, but a number of firms have said they wouldn't do so because the jurisdiction is comparatively small.

In the meantime, individual states are pushing ahead with their attempts to protect children from what they might see and feel while using social media, while big tech is pushing back. It seems clear that the war over the future of social media is only just getting started.

Source: BBC